Doby's Lab

DropPath: What It Is and How It Differs from Dropout (Using timm and Reading Its Source)

Doby, 2024. 5. 12. 12:17

🤔 Problem

When reading open-source vision models, I often see an instance of a class called DropPath created and used inside the model. It is usually imported from a library called timm.

So today I want to record what DropPath is, what the timm library is, and how DropPath is implemented inside it.

😀 What is DropPath? (= Stochastic Depth)

As the similar name suggests, DropPath plays a role similar to Dropout.

The concept comes from the paper Deep Networks with Stochastic Depth and can be used in models built with residual connections. The paper calls it Stochastic Depth.

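In a residual block, what DropPath does is skip \(f_l(H_{l-1})\) with some random probability.

That is, the block's output is randomly either \(f(x)+x\) or \(x\). When training in batches, the timm implementation analyzed later in this post makes this decision independently for each sample in the batch.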
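For reference, the paper expresses this as a Bernoulli gate on each block. The formula below follows the paper's notation as I understand it; \(b_l\) (the gate) and \(p_l\) (the survival probability of block \(l\)) are symbols that this post does not otherwise define.

\[
H_l = \mathrm{ReLU}\big(b_l \, f_l(H_{l-1}) + \mathrm{id}(H_{l-1})\big), \qquad b_l \sim \mathrm{Bernoulli}(p_l)
\]

With \(b_l = 1\) this is the usual residual block, and with \(b_l = 0\) only the identity path remains, which is the \(x\) case described above.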

So what does this improve?

1️⃣ Training time savings

When DropPath is applied, only the residual connection of the block remains, so the network effectively becomes shorter. As a result, training time goes down.
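A minimal sketch of why a dropped block saves compute, assuming the whole block is skipped (as in the paper) rather than masked per sample. `stochastic_residual_block`, `f`, and `survival_prob` are hypothetical names for illustration, and the ReLU after the sum is left out for brevity.

```python
import random


def stochastic_residual_block(x, f, survival_prob, training, rng=random):
    """Residual block f(x) + x whose branch f is skipped at random during training."""
    if training:
        if rng.random() < survival_prob:
            return f(x) + x      # block survives: usual residual output
        return x                 # block dropped: f is never evaluated
    # At test time every block is kept, scaled by its survival probability.
    return survival_prob * f(x) + x


calls = []  # records every time the toy branch below actually runs


def counted_double(x):
    """Toy branch that doubles its input and logs the call."""
    calls.append(x)
    return 2.0 * x


dropped = stochastic_residual_block(1.0, counted_double, survival_prob=0.0, training=True)
calls_after_drop = len(calls)
kept = stochastic_residual_block(1.0, counted_double, survival_prob=1.0, training=True)
test_time = stochastic_residual_block(1.0, counted_double, survival_prob=0.5, training=False)
```

When the block is dropped, `counted_double` is never called, which is where the savings come from. A per-sample mask applied after \(f(x)\) has already been computed, as in the timm function analyzed later in this post, regularizes in the same way but does not skip the computation itself.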

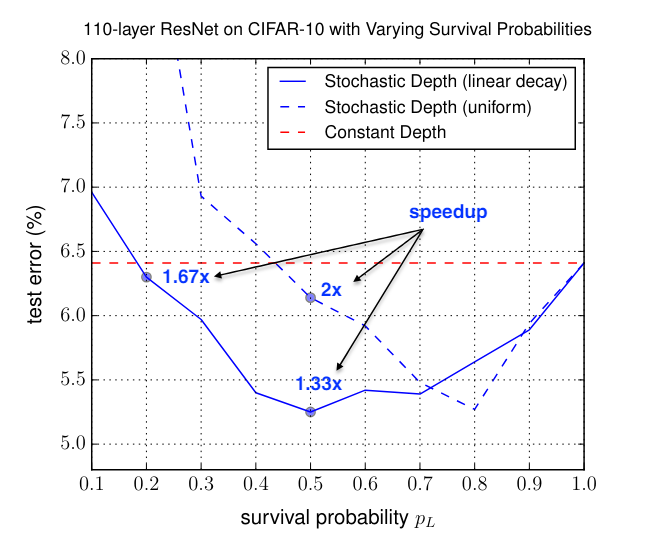

In the paper's experiment shown above, a survival probability of 0.5 cut training time by about 25%, and model performance was also best at 0.5.

With a survival probability of 0.2, performance was about the same as without DropPath, while training time was cut by about 40%.
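The 25% figure can be sanity-checked with a little arithmetic. The sketch below assumes the paper's linear decay rule, where block \(l\) of \(L\) survives with probability \(1 - \frac{l}{L}(1 - p_L)\) and the values 0.5 and 0.2 above refer to \(p_L\), the survival probability of the last block. The function names and the choice of 54 blocks (the block count of a 110-layer ResNet) are illustrative assumptions, not something this post specifies.

```python
def linear_decay_survival(num_blocks, p_last):
    """Survival probabilities for blocks 1..num_blocks, decaying linearly down to p_last."""
    return [1.0 - (i / num_blocks) * (1.0 - p_last) for i in range(1, num_blocks + 1)]


def expected_active_blocks(survival_probs):
    """Expected number of blocks that actually run in one training forward pass."""
    return sum(survival_probs)


num_blocks = 54  # assumption: a 110-layer ResNet with 54 residual blocks
probs = linear_decay_survival(num_blocks, p_last=0.5)
ratio = expected_active_blocks(probs) / num_blocks  # fraction of blocks active on average
```

Under these assumptions roughly three quarters of the blocks are active on average, which is in line with the reported savings of about 25%.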

2️⃣ Implicit model ensemble

If the model has \(L\) residual blocks, applying DropPath is like training \(2^L\) different models, because each block is independently either applied or skipped. In other words, it has the effect of an ensemble.
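To make the \(2^L\) count concrete, here is a small sketch that enumerates every on/off pattern for a toy model with three residual blocks. `enumerate_subnetworks` is a hypothetical helper written for this illustration.

```python
from itertools import product


def enumerate_subnetworks(num_blocks):
    """All on/off patterns of the residual branches; 1 = branch kept, 0 = branch dropped."""
    return list(product((0, 1), repeat=num_blocks))


configs = enumerate_subnetworks(3)  # 2 ** 3 distinct sub-networks
```

Each tuple corresponds to one sub-network that can be sampled during training, from `(0, 0, 0)` (identity paths only) to `(1, 1, 1)` (the full model).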

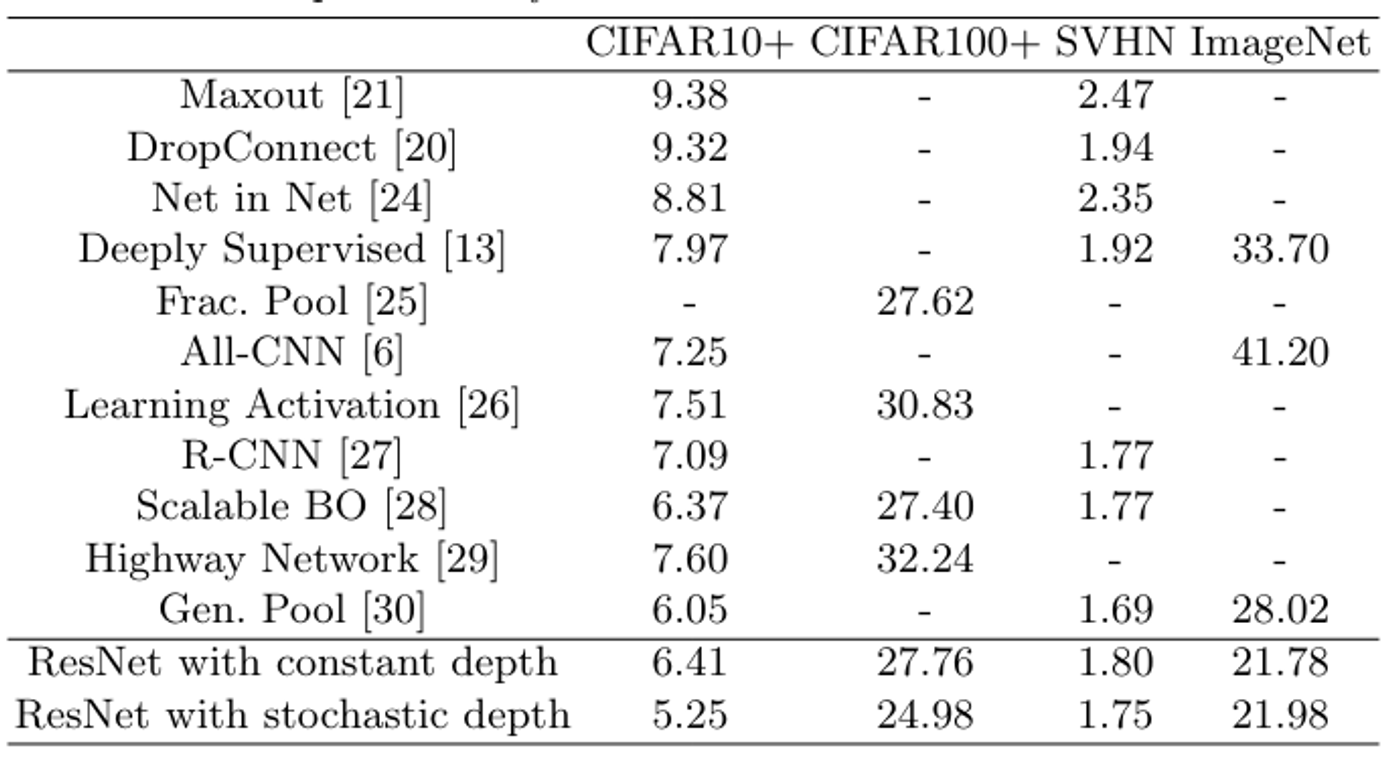

As supporting evidence, the paper trains on benchmark datasets and compares test error against several other studies, showing clearly better performance.

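😀 Reading the timm source & what I learned

timm is a library, hosted under Hugging Face, that provides image models (including pre-trained ones). It also provides layers that implement various functions as open source (timm.layers, previously imported as timm.models.layers).

I learned a lot from how the DropPath class is implemented, so I want to summarize it here. Writing everything out as prose would make the post too long, so the details are all in the code comments.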

https://github.com/huggingface/pytorch-image-models/blob/main/timm/layers/drop.py#L150


The comments annotate the code starting at line 150 of the file above.

```python
import torch
from torch import nn


def drop_path(x, drop_prob: float = 0., training: bool = False, scale_by_keep: bool = True):
    r"""
    Applied to the residual branch output f(x) in f(x) + x, not to the identity path.
    The argument x of this function is that branch output.
    """
    if drop_prob == 0. or not training:
        return x
    keep_prob = 1 - drop_prob

    # (1) What the shape below means
    # Setting aside the batch dimension (x.shape[0],), the part to look at is
    # (1,) * (x.ndim - 1): one singleton dimension per non-batch dimension of x.
    # The result is (batch_size, 1, 1, ..., 1), chosen on purpose so that the mask
    # broadcasts over every element of a sample (Broadcasting Semantics).
    # -> This is what lets each sample be dropped independently.
    shape = (x.shape[0],) + (1,) * (x.ndim - 1)

    # (2) Tensor.new_empty(size)
    # https://pytorch.org/docs/stable/generated/torch.Tensor.new_empty.html
    # Returns a tensor of the given size filled with uninitialized data, with the
    # same torch.dtype and torch.device as the source tensor. My guess is that it
    # is used here so the mask inherits x's dtype and device.
    # new_empty is enough because bernoulli_() overwrites every value anyway, so
    # initializing to some specific value first would be wasted work.

    # (3) Tensor.bernoulli_()
    # https://pytorch.org/docs/stable/generated/torch.Tensor.bernoulli_.html
    # Each element becomes the outcome of a Bernoulli trial (1 with probability keep_prob).
    # Why use the in-place version if the result is assigned to random_tensor anyway?
    # My guess: the in-place version fills the buffer new_empty just allocated,
    # whereas bernoulli() would allocate a second tensor for the result.
    random_tensor = x.new_empty(shape).bernoulli_(keep_prob)

    if keep_prob > 0.0 and scale_by_keep:
        # (My guess)
        # I could not find scale_by_keep in the paper, but judging from the formula,
        # dropping samples means the branch contributes for fewer samples.
        # To compensate, the samples that survive are weighted by 1 / keep_prob.
        # Since E[mask / keep_prob] = 1, the expected branch output stays equal to x,
        # which matches inference, where nothing is dropped (the same idea as the
        # rescaling in dropout).
        random_tensor.div_(keep_prob)
    return x * random_tensor


class DropPath(nn.Module):
    def __init__(self, drop_prob: float = 0., scale_by_keep: bool = True):
        super(DropPath, self).__init__()
        self.drop_prob = drop_prob
        self.scale_by_keep = scale_by_keep

    def forward(self, x):
        return drop_path(x, self.drop_prob, self.training, self.scale_by_keep)
```

📄 What I learned

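The first is the variety of methods used. The code relies heavily on built-in tensor methods, and whether a method name ends with '_' (underscore) determines whether it is an in-place operation. It was also fun to look into why in-place operations were used from a memory perspective (mutable vs. immutable): div_(), bernoulli_().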
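A small sketch of the difference, assuming PyTorch is installed. It compares `div` and `div_` by checking `data_ptr()`, the address of the first element of a tensor's storage; the variable names are only for illustration.

```python
import torch

x = torch.ones(4)
ptr_before = x.data_ptr()

y = x.div(2.0)    # out-of-place: the result goes into a newly allocated tensor
x_unchanged = bool((x == 1.0).all())

x.div_(2.0)       # in-place: overwrites the memory x already owns
same_storage = x.data_ptr() == ptr_before
new_storage = y.data_ptr() != ptr_before
```

After both calls `x` and `y` hold the same values, but only `y` required a new allocation.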

Building the shape of the new tensor with tuple operations was also something worth learning. Using the Broadcasting Semantics I had studied earlier to apply DropPath to each sample was impressive as well.
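The tuple trick and the broadcasting can be tried in isolation. The sketch below assumes PyTorch is installed and uses a made-up 4D input of ones; it mirrors the masking steps of `drop_path` but is not the library code itself.

```python
import torch

torch.manual_seed(0)

x = torch.ones(4, 3, 2, 2)                     # (batch, channels, height, width)
shape = (x.shape[0],) + (1,) * (x.ndim - 1)    # tuple concat + repeat -> (4, 1, 1, 1)

keep_prob = 0.5
mask = x.new_empty(shape).bernoulli_(keep_prob)  # one 0/1 draw per sample
mask.div_(keep_prob)                             # survivors are scaled by 1 / keep_prob
out = x * mask                                   # mask broadcasts over C, H, W
```

Because the mask has a single value per sample, every sample in `out` is either all zeros or uniformly scaled by `1 / keep_prob`.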

📂 Reference

- Deep Networks with Stochastic Depth: https://arxiv.org/abs/1603.09382

- pytorch-image-models/timm/layers/drop.py: https://github.com/huggingface/pytorch-image-models/blob/main/timm/layers/drop.py#L150

- torch.Tensor.new_tensor (PyTorch 2.3 documentation): https://pytorch.org/docs/stable/generated/torch.Tensor.new_tensor.html

- torch.Tensor.bernoulli_ (PyTorch 2.3 documentation): https://pytorch.org/docs/stable/generated/torch.Tensor.bernoulli_.html
